Children as young as nine have been added to malicious WhatsApp groups promoting self-harm, sexual violence and racism, a BBC investigation has found.

Thousands of parents with children at schools across Tyneside have been sent a warning issued by Northumbria Police.

One parent, who we are calling Mandy to protect her child’s identity, said her 12-year-old daughter had viewed sexual images, racism and swearing that “no child should be seeing”.

WhatsApp owner Meta said all users had “options to control who can add them to groups”, and the ability to block and report unknown numbers.

Schools said pupils in Years 5 and 6 were being added to the groups, and one head discovered 40 children in one year group were involved.

The BBC has seen screenshots from one chat which included images of mutilated bodies.

Mandy said it took some coaxing, but eventually her daughter showed her some of the messages in one group, which she found had 900 members.

“I immediately removed her from the group but the damage may already have been done,” she said.

“I felt sick to my stomach – I find it absolutely terrifying.

“She’s only 12, and now I’m worried about her using her phone.”

Warnings were sent to parents via schools

Northumbria Police said it was investigating a “report of malicious communications” involving inappropriate content aimed at young people.

“We would encourage people to take an interest in their children’s use of social media and report any concerns to police,” a spokesperson said.

‘Playing on their mind’

The WhatsApp messaging app has more than two billion users worldwide.

It recently reduced its minimum age in the UK and Europe from 16 to 13, while the NSPCC said children under 16 should not be using it.

The charity said experiences like Mandy’s daughter’s were not unusual.

Rani Govender, the NSPCC's senior officer for children's safety online, said content promoting suicide or self-harm could be devastating and exacerbate existing mental health issues.

“It can impact their sleep, their anxiety, it can make them just not feel like themselves and really play on their mind afterwards,” she added.

WhatsApp recently reduced its minimum age in the UK and Europe from 16 to 13 (Getty Images)

Groups promoting harmful content on social media have featured in high-profile cases, including the death of Molly Russell in 2017.

An inquest concluded the 14-year-old ended her life while suffering from depression, with the “negative effects of online content” a contributing factor.

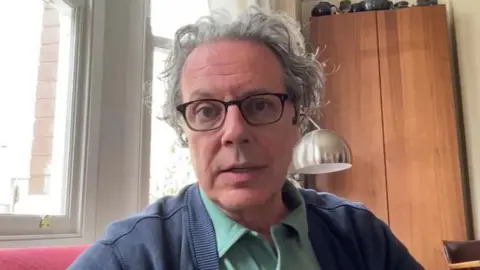

Her father, Ian Russell, said it was “really disturbing” there was a WhatsApp group targeting such young children.

He added the platform’s end-to-end encryption made the situation more difficult.

“The social media platforms themselves don’t know the kinds of messages they’re conveying and that makes it different from most social media harm,” he said.

“If the platforms don’t know and the rest of the world don’t know, how are we going to make it safe?”

Molly Russell took her own life after struggling with images of self-harm (PA Media)

Prime Minister Rishi Sunak told the BBC that, as a father of two children, he believed it was “imperative that we keep them safe online”.

He said the Online Safety Act was “one of the first anywhere in the world” and would be a step towards that goal.

“What it does is give the regulator really tough new powers to make sure that the big social media companies are protecting our children from this type of material,” he said.

“They shouldn’t be seeing it, particularly things like self-harm, and if they don’t comply with the guidelines that the regulator puts down they will be in for very significant fines, because like any parent we want our kids to be growing up safely, out playing in fields or online.”

- If you have been affected by any of the issues raised in this story you can visit BBC Action Line.

Mr Russell said he had doubts about whether the Online Safety Act would give the regulator enough powers to intervene to protect children on messaging apps.

It was “particularly concerning that even if children leave the group, they can continue to be contacted by other members of the group, prolonging the potential danger”, he said.

He urged parents to talk to even very young children about how to spot danger and to tell a trusted adult if they see something disturbing.

Ian Russell, father of Molly Russell, now runs the Molly Rose Foundation

Mandy said her daughter had been contacted online by a stranger even after deleting the chat.

“She also told me a boy had called her – as a result of getting her number from the group – and had invited ‘his cousin’ to talk to her too,” she said.

“Thankfully she was savvy enough to end the call and reply to their text messages saying she was not prepared to give them her surname or tell them where she went to school.”

‘Profits after safety’

Mr Russell said parents should never underestimate what even young children are capable of sharing online.

“When we first saw the harmful content that Molly had been exposed to before her death we were horrified,” he added.

He said he had never thought global platforms would carry such content or allow their algorithms to recommend it.

“We thought the platforms would take that content down, but they just wrote back to us that it didn’t infringe their community guidelines and therefore the content would be left up,” he said.

“It’s well over six years since Molly died and too little has changed.

“The corporate culture at these platforms has to change; profits must come after safety.”